bigr_epicure_pipeline

Snakemake pipeline for Epicure analyses: ChIP-Seq, ATAC-Seq, Cut&Tag, Cut&Run, MeDIP-Seq, 8-OxoG-Seq

Summary

Usage

Installation (following Snakemake-Workflows guidelines)

note: This action has already been done for you if you work at Gustave Roussy. See the end of this section.

- Install Snakemake and Snakedeploy with the Mamba package manager. Given that Mamba is installed, run:

mamba create -c conda-forge -c bioconda --name bigr_epicure_pipeline snakemake snakedeploy pandas

- Ensure your conda environment is activated in your bash terminal:

conda activate bigr_epicure_pipeline

Alternatively, if you work at Gustave Roussy, you can use our shared environment:

conda activate /mnt/beegfs/pipelines/unofficial-snakemake-wrappers/shared_conda/bigr_epicure_pipeline
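Before moving on to deployment, it may help to confirm that the tools installed above are reachable from the activated environment. The sketch below is a hypothetical helper (`check_tools` is not part of the pipeline); it only relies on the `command -v` shell builtin.

```bash
# Hypothetical helper: report every command that is not found on PATH.
# Returns 0 when all tools are available, 1 otherwise.
check_tools() {
    local missing=0 tool
    for tool in "$@"; do
        if ! command -v "$tool" > /dev/null 2>&1; then
            echo "missing: ${tool}" >&2
            missing=1
        fi
    done
    return "${missing}"
}

# Example, once the environment is activated:
# check_tools snakemake snakedeploy && echo "environment looks ready"
```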

Deployment (following Snakemake-Workflows guidelines)

note: This action has been made easier for you if you work at Gustave Roussy. See the end of this section.

Given that Snakemake and Snakedeploy are installed and available (see Installation), the workflow can be deployed as follows.

- Go to your working directory:

cd path/to/my/project

- Deploy workflow:

snakedeploy deploy-workflow https://github.com/tdayris/bigr_epicure_pipeline . --tag v0.4.0

Snakedeploy will create two folders, `workflow` and `config`. The former contains the deployment of the chosen workflow as a Snakemake module; the latter contains configuration files, which will be modified in the next step in order to configure the workflow to your needs. Later, when executing the workflow, Snakemake will automatically find the main `Snakefile` in the `workflow` subfolder.

- Consider putting the exact version of the pipeline, and all modifications you might want to perform, under version control, e.g. by managing it via a (private) GitHub repository.

Configure workflow (following Snakemake-Workflows guidelines)

See the dedicated `config/README.md` file for explanations of all options and their consequences.

Run workflow (following Snakemake-Workflows guidelines)

note: This action has been made easier for you if you work at Gustave Roussy. See the end of this section.

Given that the workflow has been properly deployed and configured, it can be executed as follows.

For running the workflow while deploying any necessary software via conda (using the Mamba package manager by default), run Snakemake with:

snakemake --cores all --use-conda

Alternatively, for users at Gustave Roussy, you may use:

bash workflow/scripts/misc/bigr_launcher.sh

Snakemake will automatically detect the main `Snakefile` in the `workflow` subfolder and execute the workflow module that has been defined by the deployment in step 2.

For further options, e.g. for cluster and cloud execution, see the Snakemake documentation.

Generate report (following Snakemake-Workflows guidelines)

After finalizing your data analysis, you can automatically generate an interactive visual HTML report for inspection of results, together with parameters and code, in the browser via:

snakemake --report report.zip

The resulting `report.zip` file can be passed on to collaborators, provided as a supplementary file in publications, or uploaded to a service like Zenodo in order to obtain a citable DOI.

Open-on-demand Flamingo

This section describes the pipeline usage through Open-on-demand web-interface at Flamingo, Gustave Roussy.

Open-on-demand job composer

Log in to the Flamingo Open-On-Demand web page using your institutional user name and password. On the main page, click on "Job", then, in the drop-down menu, hit "Job Composer".

Create a new template

At the top of the new page, in the grey menu bar, hit "Template". Then use the light blue button "+ New Template".

An empty template form appears.

Fill the "Path" section with the following path:

/mnt/beegfs/pipelines/bigr_epicure_pipeline/open-on-demand-templates

It is very important that you do not change this path. Fill the rest of the template as you like.

Hit the white "save" button at the bottom left of the form.

Edit the template with your current work

Back on the "Template" page we saw earlier, a new line should be displayed with our new template. Click on the line and the right section of the page should display dedicated information about this template.

Name it as you like, but do not create a job now! Scroll down and hit the white button: "Open Dir"

Edit bigr_launcher.sh

Click on the file `bigr_launcher.sh` and hit the white button: "Edit".

Follow the comments in grey and edit lines 11 to 30 as you'd like. You can refer to this page to find the latest version of this pipeline. You should always use the latest version of this pipeline when starting a new project.

Launch a new job

Back to the Job Composer window, you can now click "+ New Job", "From Template" and select the template we just made.

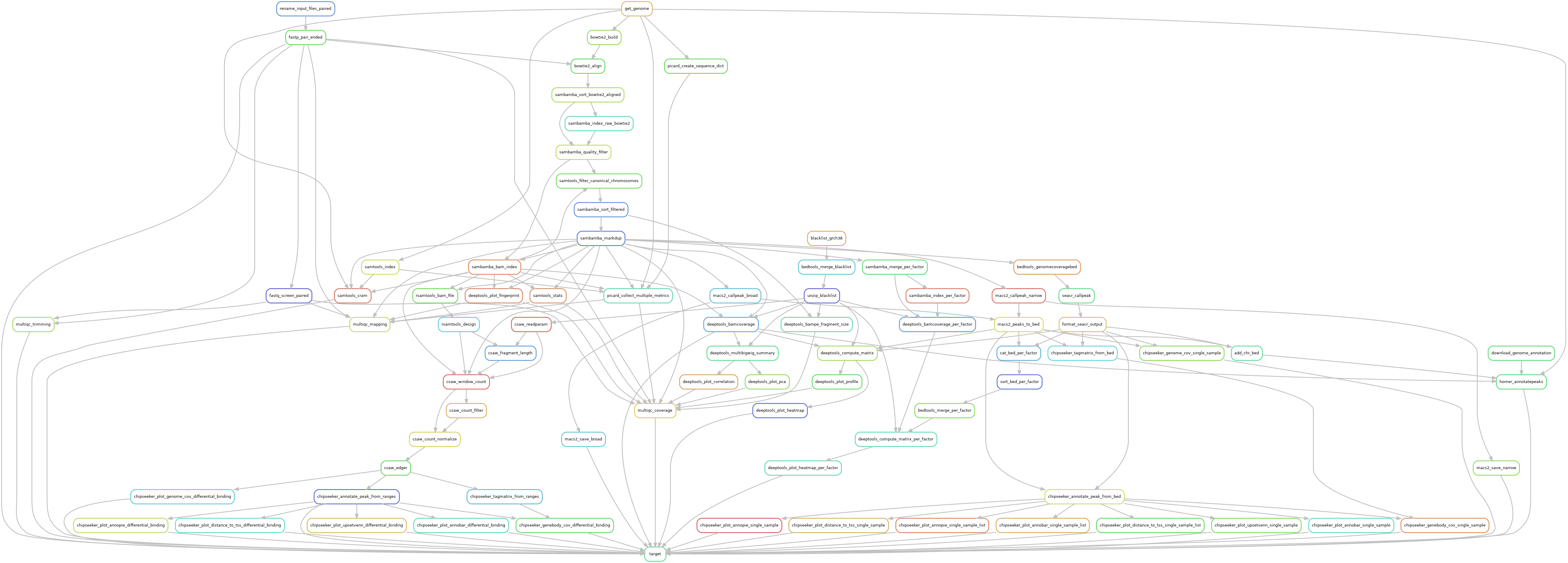

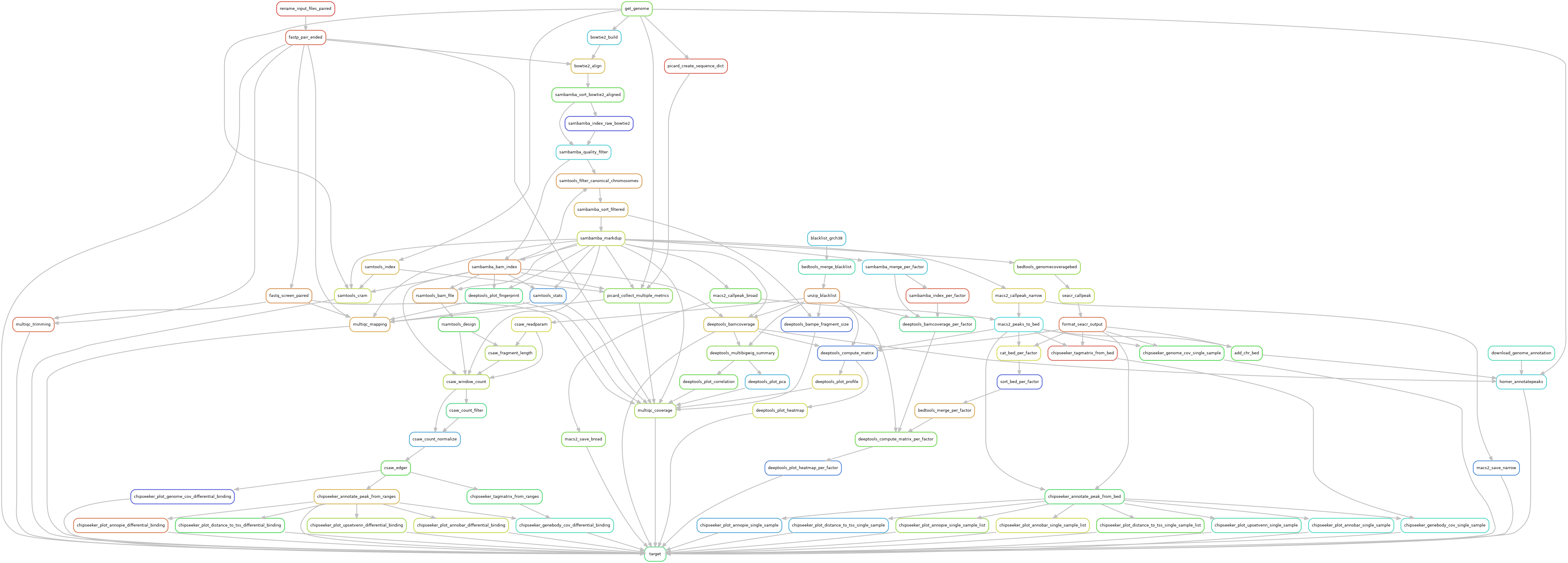

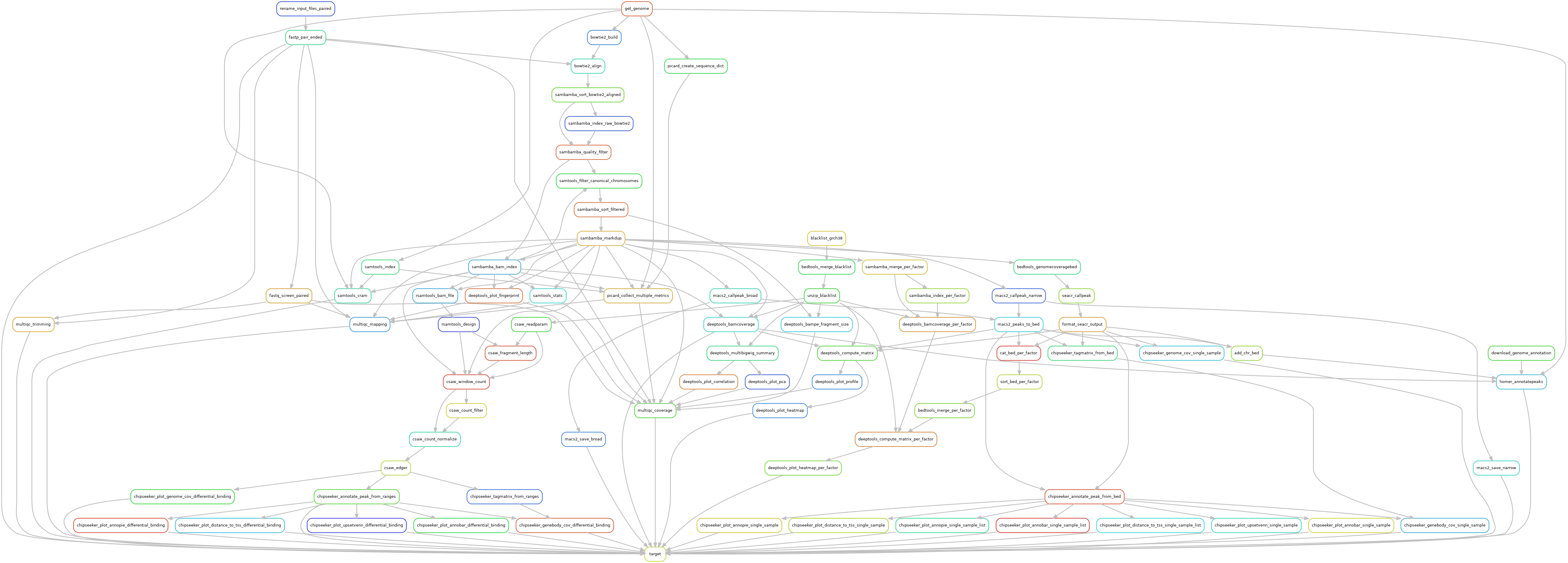

Pipeline description

note: The following steps may not be performed in that exact order.

Pre-processing

| Step | Tool | Documentation | Reason |
|---|---|---|---|
| Download genome sequence | curl | Snakemake-Wrapper: download-sequence | Ensure genome sequences are consistent in Epicure analyses |
| Download genome annotation | curl | Snakemake-Wrapper: download-annotation | Ensure genome annotations are consistent in Epicure analyses |
| Download blacklisted regions | wget | | Ensure blacklisted regions are consistent in Epicure analyses |
| Trimming + QC | Fastp | Snakemake-Wrapper: fastp | Perform read quality check and corrections, UMI and adapter removal, QC before and after trimming |
| Quality Control | FastqScreen | Snakemake-Wrapper: fastq-screen | Perform library quality check |
| Download fastq screen indexes | wget | | Ensure fastq_screen reports are the same among Epicure analyses |

Read mapping

| Step | Tool | Documentation | Reason |
|---|---|---|---|
| Indexation | Bowtie2 | Snakemake-Wrapper: bowtie2-build | Index genome for up-coming read mapping |
| Mapping | Bowtie2 | Snakemake-Wrapper: bowtie2-align | Align sequenced reads on the genome |
| Filtering | Sambamba | Snakemake-Wrapper: sambamba-sort | Sort alignments over chromosome position; this reduces up-coming required computing resources and alignment-file size. |
| Filtering | Sambamba | Snakemake-Wrapper: sambamba-view | Remove non-canonical chromosomes and mappings belonging to the mitochondrial chromosome. Remove low quality mappings. |
| Filtering | Sambamba | Snakemake-Wrapper: sambamba-markdup | Remove sequencing duplicates. |
| Filtering | DeepTools | Snakemake-Wrapper: sambamba-sort | For Atac-seq only. Reads on the positive strand should be shifted 4 bp to the right and reads on the negative strand should be shifted 5 bp to the left as in Buenrostro et al. 2013. |
| Archive | Sambamba | Snakemake-Wrapper: sambamba-view | Compress alignment files in CRAM format in order to reduce archive size. |
| Quality Control | Picard | Snakemake-Wrapper: picard-collect-multiple-metrics | Summarize alignments, GC bias, insert size metrics, and quality score distribution. |
| Quality Control | Samtools | Snakemake-Wrapper: samtools-stats | Summarize alignment metrics. Performed before and after mapping post-processing in order to highlight possible bias. |
| Quality Control | DeepTools | Snakemake-Wrapper: deeptools-fingerprint | Control immunoprecipitation signal specificity. |
| GC-bias correction | DeepTools | Official Documentation | Filter regions with abnormal GC-Bias as described in Benjamini & Speed, 2012. OxiDIP-Seq only. |

Coverage

| Step | Tool | Documentation | Reason |
|---|---|---|---|
| Coverage | DeepTools | Snakemake-Wrapper: deeptools-bamcoverage | Compute genome coverage, normalized to 1M reads |
| Coverage | MEDIPS | Official documentation | Compute genome coverage with CpG density correction using MEDIPS (MeDIP-Seq only) |
| Scaled-Coverage | DeepTools | Snakemake-Wrapper: deeptools-computematrix | Calculate scores per genomic region. Used for heatmaps and profile coverage plots. |
| Depth | DeepTools | Snakemake-Wrapper: deeptools-plotheatmap | Assess the sequencing depth of given samples |
| Coverage | CSAW | Official documentation | Count and filter reads over sliding windows. |
| Filter | CSAW | Official documentation | Filter reads over background signal. |
| Quality Control | DeepTools | Snakemake-Wrapper: deeptools-plotprofile | Plot profile scores over genomic regions |
| Quality Control | DeepTools | Official Documentation | Plot principal component analysis (PCA) over bigwig coverage to assess sample dissimilarity. |
| Quality Control | DeepTools | Official Documentation | Plot sample correlation based on the coverage analysis. |
| Coverage | BedTools | Snakemake-Wrapper: bedtools-genomecov | Estimate raw coverage over the genome |

Peak-Calling

| Step | Tool | Documentation | Reason |
|---|---|---|---|
| Peak-Calling | Macs2 | Snakemake-Wrapper: macs2-callpeak | Search for significant peaks |
| Heatmap | DeepTools | Snakemake-Wrapper: deeptools-plotheatmap | Plot heatmap and peak coverage among samples |
| FRiP score | Manually assessed | | Compute the Fraction of Reads in Peaks to assess the signal/noise ratio. |
| Peak-Calling | SEACR | Official Documentation | Search for significant peaks in Cut&Tag or Cut&Run |
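The FRiP score is the fraction of reads falling inside called peaks: reads in peaks divided by total reads. As a minimal sketch (the `frip` function name is hypothetical, and the pipeline's own computation may differ), the ratio can be computed from two counts; the commented commands show one possible way to obtain those counts with Samtools and BedTools, with placeholder file names.

```bash
# Hypothetical helper: FRiP = reads overlapping peaks / total reads.
# Prints the ratio with four decimals.
frip() {
    local in_peaks="$1" total="$2"
    if [ "${total}" -le 0 ]; then
        echo "total read count must be positive" >&2
        return 1
    fi
    awk -v a="${in_peaks}" -v b="${total}" 'BEGIN { printf "%.4f\n", a / b }'
}

# One possible way to get the counts (placeholder file names):
# total=$(samtools view -c -F 4 sample.bam)
# in_peaks=$(bedtools intersect -u -a sample.bam -b sample_peaks.narrowPeak | samtools view -c -)
# frip "${in_peaks}" "${total}"
```

For instance, 250 reads in peaks out of 1000 mapped reads gives a FRiP of 0.2500.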

Differential Peak Calling

| Step | Tool | Documentation | Reason |
|---|---|---|---|
| Peak-Calling | MEDIPS | Official documentation | Search for significant variation in peak coverage with EdgeR (MeDIP-Seq only) |
| Normalization | CSAW | Official documentation | Correct composition bias and variable library sizes. |
| Differential Binding | CSAW-EdgeR | Official documentation | Call differentially covered windows |

Peak annotation

| Step | Tool | Documentation | Reason |
|---|---|---|---|
| Annotation | MEDIPS | Official Documentation | Annotate peaks with Ensembl-mart through MEDIPS (MeDIP-Seq only) |
| Annotation | Homer | Snakemake-Wrapper: homer-annotatepeaks | Perform peak annotation to associate peaks with nearby genes. |
| Annotation | ChIPseeker | Official Documentation | Perform peak annotation to associate peaks with nearby genes. |
| Quality Control | ChIPseeker | Official Documentation | Plot a region-wise bar plot. |
| Quality Control | ChIPseeker | Official Documentation | Plot a region-wise upset plot. |
| Quality Control | ChIPseeker | Official Documentation | Visualize distribution of TF-binding loci relative to TSS |

Motifs

| Step | Tool | Documentation | Reason |
|---|---|---|---|
| De-novo | Homer | Official Documentation | Perform de novo motif discovery. |

Material and Methods

ChIP-Seq

Reads were trimmed using fastp version 0.23.2 with default parameters. Trimmed reads were mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1 with the `--very-sensitive` parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 were dropped using Sambamba version 1.0 with the parameter `--filter 'mapping_quality >= 30'`. For paired-end libraries, orphan reads were dropped using `--filter 'not (unmapped or mate_is_unmapped)'` in Sambamba version 1.0. Remaining reads were filtered over canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered a canonical chromosome; mitochondrial reads were therefore dropped. When regions of interest were defined over the genome, BedTools version 2.31.0 was used with the optional parameters `-wa -sorted` to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were filtered out using Sambamba version 1.0 with the optional parameters `--remove-duplicates --overflow-list-size 600000`.
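The two Sambamba filters above act on SAM fields that can be illustrated with plain `awk`: column 5 holds the mapping quality, and the FLAG in column 2 has bit 0x4 set for an unmapped read and bit 0x8 for a read whose mate is unmapped. The sketch below is not what the pipeline runs (it uses Sambamba); it only shows which records `mapping_quality >= 30` and `not (unmapped or mate_is_unmapped)` keep. `filter_sam` is a hypothetical name.

```bash
# Hypothetical illustration: keep SAM records with MAPQ >= 30 whose read and mate
# are both mapped. Header lines (starting with '@') are passed through unchanged.
filter_sam() {
    awk -F '\t' -v OFS='\t' '
        /^@/ { print; next }
        {
            flag = $2 + 0
            unmapped      = int(flag / 4) % 2   # bit 0x4
            mate_unmapped = int(flag / 8) % 2   # bit 0x8
            if ($5 + 0 >= 30 && !unmapped && !mate_unmapped) print
        }'
}

# Example (placeholder file names):
# samtools view -h sample.bam | filter_sam | samtools view -b -o filtered.bam -
```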

Quality controls were performed both before and after these filters to ensure no valuable data was lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: `--normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY`. Chromosomes X and Y (as well as chrM) were ignored in the RPKM normalization in order to reduce noise in the final result. The same tool was used to plot both quality controls and peak coverage heatmaps.
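The deepTools documentation defines RPKM per bin as the number of reads in the bin divided by the number of mapped reads (in millions) times the bin length (in kb); with `--ignoreForNormalization`, reads on the listed chromosomes are left out of that total. The sketch below reproduces the arithmetic only. The helper names are hypothetical, and taking the total from `samtools idxstats` output (columns: name, length, mapped, unmapped) is an approximation of how deepTools counts reads.

```bash
# Hypothetical helpers illustrating the RPKM arithmetic:
#   RPKM = reads_in_bin / (bin_length_kb * mapped_reads_millions)

# Sum mapped reads from `samtools idxstats` output (stdin), skipping the
# chromosomes passed as arguments, as --ignoreForNormalization would.
normalization_total() {
    awk -v skip="$*" '
        BEGIN { n = split(skip, names, " "); for (i = 1; i <= n; i++) ignored[names[i]] = 1 }
        !($1 in ignored) { total += $3 }
        END { print total + 0 }'
}

# Usage: rpkm READS_IN_BIN BIN_LENGTH_BP TOTAL_MAPPED_READS
rpkm() {
    awk -v c="$1" -v len="$2" -v n="$3" \
        'BEGIN { printf "%.2f\n", c / ((len / 1000) * (n / 1e6)) }'
}

# Example (placeholder file name):
# total=$(samtools idxstats sample.bam | normalization_total chrX chrM chrY)
# rpkm 10 5 "${total}"
```

With `--binSize 5`, a bin holding 10 reads out of one million counted reads gets an RPKM of 2000.00, which is why small bins produce large values.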

Single-sample peak calling was performed with Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the `--format BAMPE` parameter, while single-end libraries used the estimated fragment size provided as input.
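For reference, the options described above could map onto `macs2 callpeak` roughly as in this sketch. It is a hypothetical dry-run helper that only prints the command; the genome size (`-g hs`, human), the output naming, and the use of `--nomodel --extsize` to pass the provided fragment size for single-end libraries are assumptions, not the pipeline's exact rule.

```bash
# Hypothetical dry-run helper: print a `macs2 callpeak` command line.
# Usage: macs2_command TREATMENT.bam CONTROL.bam|none paired|single narrow|broad NAME [FRAGMENT_SIZE]
macs2_command() {
    local treatment="$1" control="$2" layout="$3" mode="$4" name="$5" fragment="${6:-}"
    local -a cmd=(macs2 callpeak -t "${treatment}" -g hs -n "${name}")
    if [ "${control}" != "none" ]; then
        cmd+=(-c "${control}")
    fi
    if [ "${layout}" = "paired" ]; then
        # Fragment size is inferred from the mate pairs
        cmd+=(--format BAMPE)
    else
        if [ -z "${fragment}" ]; then
            echo "single-end libraries need a fragment size" >&2
            return 1
        fi
        # Skip model building and extend reads to the provided fragment size
        cmd+=(--format BAM --nomodel --extsize "${fragment}")
    fi
    if [ "${mode}" = "broad" ]; then
        cmd+=(--broad)
    fi
    echo "${cmd[*]}"
}
```

Printing instead of executing keeps the sketch runnable without MACS2 installed; the printed line can be inspected, then run as-is.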

Peak annotation was performed using ChIPseeker version 1.34.0 with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, on the genome identified in the configuration file (GRCh38 or mm10), using the optional parameters `-size 200 -homer2`. The motifs were used for further annotation of peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. Input signal (if available) was used to find signal of interest over background noise; otherwise, a large binning was defined over mappable genome regions to build a background noise track used as an input signal. In either case, a log2 ratio of 1.1 between the background and the sample of interest was used as a threshold value to identify regions of interest. Data were normalized over efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias. Differentially bound sites were called using edgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from edgeR were corrected by merging regions across gene annotations to account for related binding sites. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.

Additional quality controls were performed using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers v2.0.0.

MNase-seq

Reads were trimmed using fastp version 0.23.2 with default parameters. Trimmed reads were mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1 with the `--very-sensitive` parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 were dropped using Sambamba version 1.0 with the parameter `--filter 'mapping_quality >= 30'`. For paired-end libraries, orphan reads were dropped using `--filter 'not (unmapped or mate_is_unmapped)'` in Sambamba version 1.0. Remaining reads were filtered over canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered a canonical chromosome; mitochondrial reads were therefore dropped. When regions of interest were defined over the genome, BedTools version 2.31.0 was used with the optional parameters `-wa -sorted` to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were filtered out using Sambamba version 1.0 with the optional parameters `--remove-duplicates --overflow-list-size 600000`.
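The canonical-chromosome filter can be pictured as building a list of contig names and passing it to `samtools view`, which accepts region names once the BAM file is indexed. This sketch assumes UCSC-style names (`chr1`, `chr2` and so on, plus `chrX` and `chrY`) and excludes `chrM` as well as unplaced, random and alternate contigs; the helper name is hypothetical, and Ensembl-style names would need a different pattern.

```bash
# Hypothetical helper: read contig names (one per line) on stdin and print the
# canonical ones. chrM and unplaced/random/alt contigs do not match the pattern.
canonical_chromosomes() {
    grep -E '^chr([0-9]+|X|Y)$'
}

# Example (placeholder file names; samtools needs sample.bam.bai for regions).
# ${regions} is left unquoted on purpose so each name becomes its own argument.
# regions=$(cut -f1 genome.fa.fai | canonical_chromosomes)
# samtools view -b -o canonical.bam sample.bam ${regions}
```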

Quality controls were performed both before and after these filters to ensure no valuable data was lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: `--normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY --MNase`. Chromosomes X and Y (as well as chrM) were ignored in the RPKM normalization in order to reduce noise in the final result, and the `--MNase` option takes MNase-seq specificity into account. The same tool was used to plot both quality controls and peak coverage heatmaps.
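According to the deepTools documentation, `--MNase` requires paired-end data, keeps only fragments of 130 to 200 bp, and counts the 3 nucleotides at the centre of each fragment. The `awk` sketch below mimics that idea on fragments given as BED intervals (chrom, start, end); the helper is hypothetical, and the exact rounding of the centre in deepTools may differ.

```bash
# Hypothetical illustration of --MNase: keep 130-200 bp fragments and emit the
# 3 bp centred on each fragment midpoint (BED, 0-based half-open coordinates).
mnase_centers() {
    awk -F '\t' -v OFS='\t' '
        {
            len = $3 - $2
            if (len < 130 || len > 200) next   # skip sub- and di-nucleosomal fragments
            mid = $2 + int(len / 2)
            print $1, mid - 1, mid + 2
        }'
}
```

A 150 bp fragment at `chr1:1000-1150` contributes the interval `chr1:1074-1077`, whereas a 300 bp fragment (likely a di-nucleosome) contributes nothing.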

Single-sample peak calling was performed with Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the `--format BAMPE` parameter, while single-end libraries used the estimated fragment size provided as input.

Peak annotation was performed using ChIPseeker version 1.34.0 with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, on the genome identified in the configuration file (GRCh38 or mm10), using the optional parameters `-size 200 -homer2`. The motifs were used for further annotation of peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. Input signal (if available) was used to find signal of interest over background noise; otherwise, a large binning was defined over mappable genome regions to build a background noise track used as an input signal. In either case, a log2 ratio of 1.1 between the background and the sample of interest was used as a threshold value to identify regions of interest. Data were normalized over efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias. Differentially bound sites were called using edgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from edgeR were corrected by merging regions across gene annotations to account for related binding sites. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.

Additional quality controls were performed using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers v2.0.0.

Gro-seq

Reads were trimmed using fastp version 0.23.2 with default parameters. Trimmed reads were mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1 with the `--very-sensitive` parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 were dropped using Sambamba version 1.0 with the parameter `--filter 'mapping_quality >= 30'`. For paired-end libraries, orphan reads were dropped using `--filter 'not (unmapped or mate_is_unmapped)'` in Sambamba version 1.0. Remaining reads were filtered over canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered a canonical chromosome; mitochondrial reads were therefore dropped. When regions of interest were defined over the genome, BedTools version 2.31.0 was used with the optional parameters `-wa -sorted` to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were filtered out using Sambamba version 1.0 with the optional parameters `--remove-duplicates --overflow-list-size 600000`.

Quality controls were performed both before and after these filters to ensure no valuable data was lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: `--normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY --Offset 12`. Chromosomes X and Y (as well as chrM) were ignored in the RPKM normalization in order to reduce noise in the final result, and the `--Offset 12` option takes GRO-seq specificity into account. The same tool was used to plot both quality controls and peak coverage heatmaps.
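The deepTools documentation describes `--Offset` as using a single position inside each read as the signal, counted from the 5' end with the alignment orientation taken into account (1 being the first base). The sketch below applies that rule to reads given as BED6 records; it is a hypothetical illustration, not the pipeline's code.

```bash
# Hypothetical illustration of --Offset N on BED6 reads (0-based, half-open):
# report the N-th base from the read 5-prime end, honouring the strand in column 6.
offset_site() {
    local n="$1"
    awk -F '\t' -v OFS='\t' -v n="${n}" '
        $6 == "+" { pos = $2 + n - 1 }   # 5-prime end is the start coordinate
        $6 == "-" { pos = $3 - n }       # 5-prime end is the last base (end - 1)
        $6 == "+" || $6 == "-" { print $1, pos, pos + 1, $4, $5, $6 }'
}
```

With an offset of 12, a read on `chr1:100-150` is reduced to the single base at 111 on the positive strand, or at 138 on the negative strand.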

Single-sample peak calling was performed with Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the `--format BAMPE` parameter, while single-end libraries used the estimated fragment size provided as input.

Peak annotation was performed using ChIPseeker version 1.34.0 with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, on the genome identified in the configuration file (GRCh38 or mm10), using the optional parameters `-size 200 -homer2`. The motifs were used for further annotation of peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. Input signal (if available) was used to find signal of interest over background noise; otherwise, a large binning was defined over mappable genome regions to build a background noise track used as an input signal. In either case, a log2 ratio of 1.1 between the background and the sample of interest was used as a threshold value to identify regions of interest. Data were normalized over efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias. Differentially bound sites were called using edgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from edgeR were corrected by merging regions across gene annotations to account for related binding sites. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.

Additional quality controls were performed using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers v2.0.0.

Ribo-seq

Reads were trimmed using fastp version 0.23.2 with default parameters. Trimmed reads were mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1 with the `--very-sensitive` parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 were dropped using Sambamba version 1.0 with the parameter `--filter 'mapping_quality >= 30'`. For paired-end libraries, orphan reads were dropped using `--filter 'not (unmapped or mate_is_unmapped)'` in Sambamba version 1.0. Remaining reads were filtered over canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered a canonical chromosome; mitochondrial reads were therefore dropped. When regions of interest were defined over the genome, BedTools version 2.31.0 was used with the optional parameters `-wa -sorted` to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were filtered out using Sambamba version 1.0 with the optional parameters `--remove-duplicates --overflow-list-size 600000`.

Quality controls were performed both before and after these filters to ensure no valuable data was lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: `--normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY --Offset 15`. Chromosomes X and Y (as well as chrM) were ignored in the RPKM normalization in order to reduce noise in the final result, and the `--Offset 15` option takes Ribo-seq specificity into account. The same tool was used to plot both quality controls and peak coverage heatmaps.

Single-sample peak calling was performed with Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the `--format BAMPE` parameter, while single-end libraries used the estimated fragment size provided as input.

Peak annotation was performed using ChIPseeker version 1.34.0 with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, on the genome identified in the configuration file (GRCh38 or mm10), using the optional parameters `-size 200 -homer2`. The motifs were used for further annotation of peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. Input signal (if available) was used to find signal of interest over background noise; otherwise, a large binning was defined over mappable genome regions to build a background noise track used as an input signal. In either case, a log2 ratio of 1.1 between the background and the sample of interest was used as a threshold value to identify regions of interest. Data were normalized over efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias. Differentially bound sites were called using edgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from edgeR were corrected by merging regions across gene annotations to account for related binding sites. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.

Additional quality controls were performed using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers v2.0.0.

Atac-seq

Reads were trimmed using fastp version 0.23.2 with default parameters. Trimmed reads were mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1 with the `--very-sensitive` parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 were dropped using Sambamba version 1.0 with the parameter `--filter 'mapping_quality >= 30'`. For paired-end libraries, orphan reads were dropped using `--filter 'not (unmapped or mate_is_unmapped)'` in Sambamba version 1.0. Remaining reads were filtered over canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered a canonical chromosome; mitochondrial reads were therefore dropped. When regions of interest were defined over the genome, BedTools version 2.31.0 was used with the optional parameters `-wa -sorted` to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were filtered out using Sambamba version 1.0 with the optional parameters `--remove-duplicates --overflow-list-size 600000`. Remaining reads were shifted as described in Buenrostro et al. 2013: reads on the positive strand were shifted 4 bp to the right and reads on the negative strand were shifted 5 bp to the left, using DeepTools version 3.5.2 and the optional parameter `--ATACshift`.
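The Tn5 correction above moves reads on the positive strand 4 bp downstream and reads on the negative strand 5 bp upstream. The `awk` sketch below applies that rule to BED6 read records by shifting both coordinates; it is a hypothetical illustration only. DeepTools applies the shift to the BAM alignments themselves, so edge cases (e.g. reads near chromosome ends) may be handled differently.

```bash
# Hypothetical illustration of the Buenrostro et al. (2013) shift on BED6 reads:
# +4 bp for reads on the positive strand, -5 bp for reads on the negative strand.
atac_shift() {
    awk -F '\t' -v OFS='\t' '
        $6 == "+" { $2 += 4; $3 += 4 }
        $6 == "-" { $2 -= 5; $3 -= 5 }
        { if ($2 < 0) $2 = 0; print }   # keep start coordinates non-negative
    '
}
```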

Quality controls were performed both before and after these filters to ensure no valuable data was lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: `--normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY`. Chromosomes X and Y (as well as chrM) were ignored in the RPKM normalization in order to reduce noise in the final result. The same tool was used to plot both quality controls and peak coverage heatmaps.

Single-sample peak calling was performed with Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the `--format BAMPE` parameter, while single-end libraries used the estimated fragment size provided as input.

Peak annotation was performed using ChIPseeker version 1.34.0 with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, on the genome identified in the configuration file (GRCh38 or mm10), using the optional parameters `-size 200 -homer2`. The motifs were used for further annotation of peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. A large binning was defined over mappable genome regions to build a background noise track used as an input signal. A log2 ratio of 1.1 between the background and the sample of interest was used as a threshold value to identify regions of interest. Data were normalized over efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias. Differentially bound sites were called using edgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from edgeR were corrected by merging regions across gene annotations to account for related binding sites. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.

Additional quality controls were performed using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers v2.0.0.

Cut&Tag

Reads were trimmed using fastp version 0.23.2 with default parameters. Trimmed reads were mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1 with the `--very-sensitive` parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 were dropped using Sambamba version 1.0 with the parameter `--filter 'mapping_quality >= 30'`. For paired-end libraries, orphan reads were dropped using `--filter 'not (unmapped or mate_is_unmapped)'` in Sambamba version 1.0. Remaining reads were filtered over canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered a canonical chromosome; mitochondrial reads were therefore dropped. When regions of interest were defined over the genome, BedTools version 2.31.0 was used with the optional parameters `-wa -sorted` to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were *not* filtered out, but marked as duplicates, using Sambamba version 1.0 with the optional parameter `--overflow-list-size 600000`.
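Marking, rather than removing, duplicates means the duplicate bit 0x400 (1024) of the SAM FLAG is set while the record is kept. The hypothetical helper below counts such records in SAM text, which is one way to check how many reads were flagged; on a BAM file, `samtools view -c -f 1024` gives the same count directly.

```bash
# Hypothetical helper: count SAM records whose FLAG has the duplicate bit (0x400) set.
count_marked_duplicates() {
    awk -F '\t' '
        /^@/ { next }                              # skip header lines
        int(($2 + 0) / 1024) % 2 == 1 { dup++ }    # bit 0x400
        END { print dup + 0 }'
}

# Example (placeholder file name):
# samtools view sample.markdup.bam | count_marked_duplicates
```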

Quality controls were performed both before and after these filters to ensure no valuable data was lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: `--normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY --ignoreDuplicates`. Chromosomes X and Y (as well as chrM) were ignored in the RPKM normalization in order to reduce noise in the final result. Reads marked as duplicates were treated as normal reads. The same tool was used to plot both quality controls and peak coverage heatmaps.

Single-sample peak calling was performed with Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the `--format BAMPE` parameter, while single-end libraries used the estimated fragment size provided as input. Alongside Macs2, SEACR version 1.3 was used to perform single-sample peak calling, using the parameters suggested in the original SEACR publication.

Peak annotation was performed using ChIPseeker version 1.34.0 with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, on the genome identified in the configuration file (GRCh38 or mm10), using the optional parameters `-size 200 -homer2`. The motifs were used for further annotation of peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. Input signal (if available) was used to separate the signal of interest from background noise. Otherwise, large bins were defined over mappable genome regions to build a background noise track used as an input signal. In either case, a log2 fold change of 1.1 between the background and the sample of interest was used as the threshold to identify regions of interest. Data were normalized for efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias. Differentially bound sites were called using EdgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from EdgeR were corrected by merging regions across gene annotations, to account for related binding sites. Throughout the whole process, reads flagged as duplicates are treated as any other read. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.
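The enrichment threshold used before differential testing can be illustrated with a simplified filter: each window count and its background count are converted to counts per million, and the window is kept when the log2 ratio reaches 1.1. This sketches the idea only, not csaw's own filtering functions; the prior count of 2 and the assumption that background counts are already scaled to the window width are illustrative choices.

```python
import math


def keep_enriched_windows(window_counts, background_counts, lib_size,
                          bg_lib_size, min_log2_fc=1.1, prior_count=2.0):
    """Return indices of windows enriched over background (simplified sketch).

    Counts are converted to counts per million so that libraries of different
    depth are comparable; prior_count avoids log2(0). Background counts are
    assumed to be already scaled to the window width.
    """
    kept = []
    for i, (win, bg) in enumerate(zip(window_counts, background_counts)):
        win_cpm = (win + prior_count) / lib_size * 1e6
        bg_cpm = (bg + prior_count) / bg_lib_size * 1e6
        if math.log2(win_cpm / bg_cpm) >= min_log2_fc:
            kept.append(i)
    return kept
```

For example, with equal library sizes a window with 18 reads over a background of 6 gives log2(20 / 8), about 1.32, and is kept, while a window matching its background gives 0 and is dropped.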

Additional quality controls were made using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers version v2.0.0.

Cut&Run

Reads are trimmed using fastp version 0.23.2 with default parameters. Trimmed reads are mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1, with the --very-sensitive parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 are dropped using Sambamba version 1.0 with the parameter --filter 'mapping_quality >= 30'. For paired-end libraries, orphan reads are dropped using --filter 'not (unmapped or mate_is_unmapped)' in Sambamba version 1.0. Remaining reads are restricted to canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered canonical, so mitochondrial reads are dropped. When regions of interest are defined over the genome, BedTools version 2.31.0 is used with the optional parameters -wa -sorted to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were not filtered out, but marked as duplicates, using Sambamba version 1.0 with the optional parameter --overflow-list-size 600000.
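The read-level filters above can be summarised as a single predicate. In this sketch the Read record and the CANONICAL set (human, UCSC-style chr1 to chr22, chrX and chrY) are hypothetical; the pipeline itself applies these rules with Sambamba, Samtools and BedTools on BAM files rather than in Python.

```python
from dataclasses import dataclass

# Assumption: human genome with UCSC-style chromosome names.
CANONICAL = {f"chr{i}" for i in range(1, 23)} | {"chrX", "chrY"}


@dataclass(frozen=True)
class Read:
    chrom: str
    start: int          # 0-based, inclusive
    end: int            # 0-based, exclusive
    mapq: int
    paired: bool
    unmapped: bool = False
    mate_unmapped: bool = False


def overlaps(read, regions):
    """True when the read shares at least one base with a (start, end) region."""
    return any(start < read.end and read.start < end
               for start, end in regions.get(read.chrom, []))


def keep_read(read, regions=None, min_mapq=30):
    if read.mapq < min_mapq:                       # mapping_quality >= 30
        return False
    if read.paired and (read.unmapped or read.mate_unmapped):
        return False                               # orphan reads
    if read.chrom not in CANONICAL:                # also drops chrM
        return False
    if regions is not None and not overlaps(read, regions):
        return False                               # outside regions of interest
    return True
```

Half-open coordinates make the "at least one base" rule a simple strict comparison: a region starting exactly where the read ends does not overlap it.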

Quality control is performed both before and after these filters to ensure no valuable data is lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: --normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY --ignoreDuplicates. Chromosomes X, Y and M were excluded from the RPKM normalization in order to reduce noise in the final result. Reads marked as duplicates were treated as normal reads. The same tool was used to plot both quality controls and peak coverage heatmaps.

Single-sample peak calling was performed by Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the --format BAMPE parameter, while single-end libraries used the estimated fragment size provided as input. Alongside Macs2, SEACR version 1.3 was used to perform single-sample peak calling, using the parameters suggested in the original SEACR publication.

Peak annotation was performed using ChIPSeeker version 1.34.0, with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, using the optional parameters -size 200 -homer2, over the genome identified in the configuration file (GRCh38 or mm10). The discovered motifs were then used to further annotate peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. Input signal (if available) was used to separate the signal of interest from background noise. Otherwise, large bins were defined over mappable genome regions to build a background noise track used as an input signal. In either case, a log2 fold change of 1.1 between the background and the sample of interest was used as the threshold to identify regions of interest. Data were normalized for efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias. Differentially bound sites were called using EdgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from EdgeR were corrected by merging regions across gene annotations, to account for related binding sites. Throughout the whole process, reads flagged as duplicates are treated as any other read. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.

Additional quality controls were made using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers version v2.0.0.

MeDIP-Seq

Reads are trimmed using fastp version 0.23.2 with default parameters. Trimmed reads are mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1, with the --very-sensitive parameter to increase mapping sensitivity.

Mapped reads with a mapping quality lower than 30 are dropped using Sambamba version 1.0 with the parameter --filter 'mapping_quality >= 30'. For paired-end libraries, orphan reads are dropped using --filter 'not (unmapped or mate_is_unmapped)' in Sambamba version 1.0. Remaining reads are restricted to canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered canonical, so mitochondrial reads are dropped. When regions of interest are defined over the genome, BedTools version 2.31.0 is used with the optional parameters -wa -sorted to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were filtered out using Sambamba version 1.0 with the optional parameters --remove-duplicates --overflow-list-size 600000.
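Marking duplicates (Cut&Tag, Cut&Run) and removing them (MeDIP-Seq, OxiDIP-Seq) differ only in what happens to flagged reads. The sketch below assumes single-end reads identified by chromosome, 5' position and strand, and keeps the first occurrence; Sambamba also takes mates and base qualities into account, so this is a simplification with hypothetical helper names.

```python
def flag_duplicates(reads):
    """Flag each read that repeats an earlier (chrom, 5' position, strand) key."""
    seen = set()
    flags = []
    for key in reads:
        flags.append(key in seen)
        seen.add(key)
    return flags


def remove_duplicates(reads):
    """Keep only the first read for each key, as in a remove-duplicates run."""
    return [read for read, is_dup in zip(reads, flag_duplicates(reads)) if not is_dup]


if __name__ == "__main__":
    reads = [("chr1", 100, "+"), ("chr1", 100, "+"), ("chr1", 100, "-")]
    print(flag_duplicates(reads))    # marking: every read is kept, one is flagged
    print(remove_duplicates(reads))  # removal: the flagged read is dropped
```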

Quality control is performed both before and after these filters to ensure no valuable data is lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: --normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY. Chromosomes X, Y and M were excluded from the RPKM normalization in order to reduce noise in the final result. The same tool was used to plot both quality controls and peak coverage heatmaps.

Single-sample peak calling was performed by Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the --format BAMPE parameter, while single-end libraries used the estimated fragment size provided as input.

Peak annotation was performed using ChIPSeeker version 1.34.0, with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, using the optional parameters -size 200 -homer2, over the genome identified in the configuration file (GRCh38 or mm10). The discovered motifs were then used to further annotate peaks with Homer.

Differential binding analysis was performed with MEDIPS version 1.50.0. If available, input signals were used to separate the signal of interest from background noise. An adjusted p-value threshold of 0.1 was chosen to find significant signal over noise. A distance of 1 base was used to merge neighboring significant windows. Differentially bound sites were called using EdgeR, and FDR values were corrected using MEDIPS internal methods as defined in the official documentation. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.
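The window selection and merge step in the MEDIPS paragraph can be sketched as follows, assuming half-open (start, end) coordinates, a strict "adjusted p-value below 0.1" comparison, and that a gap of at most 1 base between consecutive significant windows triggers a merge. The helper is hypothetical and does not reproduce MEDIPS internals.

```python
def merge_significant_windows(windows, padj_threshold=0.1, max_distance=1):
    """Select significant windows, then merge neighbours on the same chromosome.

    windows: iterable of (chrom, start, end, padj), with end exclusive.
    Two consecutive significant windows are merged when the gap between them
    (next start minus current end) is at most max_distance bases.
    """
    significant = sorted(
        (chrom, start, end) for chrom, start, end, padj in windows
        if padj < padj_threshold
    )
    merged = []
    for chrom, start, end in significant:
        if merged and merged[-1][0] == chrom and start - merged[-1][2] <= max_distance:
            _, prev_start, prev_end = merged[-1]
            merged[-1] = (chrom, prev_start, max(prev_end, end))
        else:
            merged.append((chrom, start, end))
    return merged


if __name__ == "__main__":
    windows = [
        ("chr1", 0, 100, 0.01), ("chr1", 100, 200, 0.05),   # adjacent: merged
        ("chr1", 201, 300, 0.02),                           # 1-base gap: merged
        ("chr1", 400, 500, 0.03), ("chr1", 500, 600, 0.5),  # second one not significant
        ("chr2", 0, 100, 0.001),
    ]
    print(merge_significant_windows(windows))
```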

Additional quality controls were made using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers version v2.0.0.

OxiDIP-Seq (OG-Seq)

Reads are trimmed using fastp version 0.23.2 with the following non-default parameter: --poly_g_min_len 25, since we expect a higher number of base modifications. Trimmed reads are mapped over the genome of interest defined in the configuration file using bowtie2 version 2.5.1 with default parameters.
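The --poly_g_min_len 25 setting means that only poly-G tails of at least 25 bases are trimmed. The sketch below shows that rule on exact G runs at the 3' end; fastp itself tolerates mismatches inside the run and has its own detection logic, so this hypothetical helper is an illustration only.

```python
def trim_poly_g(seq, min_len=25):
    """Trim a trailing run of G bases, but only when it is at least min_len long."""
    run = len(seq) - len(seq.rstrip("G"))  # length of the 3' G run
    return seq[:len(seq) - run] if run >= min_len else seq


if __name__ == "__main__":
    print(trim_poly_g("ACGT" + "G" * 30))  # long tail: trimmed
    print(trim_poly_g("ACGT" + "G" * 10))  # short tail: kept
```

Raising the minimum length protects genuine G-rich read ends, which matter when guanine modifications are the signal of interest.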

Mapped reads with a mapping quality lower than 30 are dropped using Sambamba version 1.0 with the parameter --filter 'mapping_quality >= 30'. For paired-end libraries, orphan reads are dropped using --filter 'not (unmapped or mate_is_unmapped)' in Sambamba version 1.0. Remaining reads are restricted to canonical chromosomes using Samtools version 1.17. The mitochondrial chromosome is not considered canonical, so mitochondrial reads are dropped. When regions of interest are defined over the genome, BedTools version 2.31.0 is used with the optional parameters -wa -sorted to keep mapped reads that overlap the regions of interest by at least one base. Duplicated reads were filtered out using Sambamba version 1.0 with the optional parameters --remove-duplicates --overflow-list-size 600000. GC bias was estimated and corrected using DeepTools version 3.5.2 with default parameters.
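GC bias estimation compares how often reads with a given GC content are observed against how often that GC content is expected from the genome. The sketch below computes that observed-over-expected ratio per GC bin from plain sequences. It is a conceptual illustration with hypothetical function names; DeepTools' computeGCBias works from the BAM file and the genome sequence with its own sampling strategy.

```python
def gc_fraction(seq):
    """Fraction of G/C among unambiguous A/C/G/T bases (0.0 if there are none)."""
    seq = seq.upper()
    acgt = sum(seq.count(base) for base in "ACGT")
    return (seq.count("G") + seq.count("C")) / acgt if acgt else 0.0


def gc_bin(seq, n_bins):
    """Map a GC fraction in [0, 1] to one of n_bins equal-width bins."""
    return min(int(gc_fraction(seq) * n_bins), n_bins - 1)


def observed_over_expected(read_seqs, genome_seqs, n_bins=10):
    """Per-GC-bin ratio of the read fraction to the genomic fraction.

    A ratio above 1 means that GC content is over-represented in the reads;
    None marks bins that never occur in the genome sample.
    """
    observed = [0] * n_bins
    expected = [0] * n_bins
    for seq in read_seqs:
        observed[gc_bin(seq, n_bins)] += 1
    for seq in genome_seqs:
        expected[gc_bin(seq, n_bins)] += 1
    n_obs, n_exp = sum(observed), sum(expected)
    if n_obs == 0 or n_exp == 0:
        raise ValueError("need at least one read and one genomic sequence")
    return [(o / n_obs) / (e / n_exp) if e else None
            for o, e in zip(observed, expected)]
```

A correction step can then down-weight reads from bins with ratios above 1 and up-weight those below 1.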

Quality control is performed both before and after these filters to ensure no valuable data is lost, using Picard version 3.0.0 and Samtools version 1.17.

Genome coverage was assessed with DeepTools version 3.5.2, using the following optional parameters: --normalizeUsing RPKM --binSize 5 --skipNonCoveredRegions --ignoreForNormalization chrX chrM chrY. Chromosomes X, Y and M were excluded from the RPKM normalization in order to reduce noise in the final result. The same tool was used to plot both quality controls and peak coverage heatmaps.

Single-sample peak calling was performed by Macs2 version 2.2.7.1 using both broad and narrow options. Paired-end libraries received the --format BAMPE parameter, while single-end libraries used the estimated fragment size provided as input.

Peak annotation was performed using ChIPSeeker version 1.34.0, with Org.eg.db annotations version 3.16.0.

De novo motif discovery was performed with Homer version 4.11 over called peaks, using the optional parameters -size 200 -homer2, over the genome identified in the configuration file (GRCh38 or mm10). The discovered motifs were then used to further annotate peaks with Homer.

Differential binding analysis was performed using CSAW version 1.32.0. Input signal (if available) was used to separate the signal of interest from background noise. Otherwise, large bins were defined over mappable genome regions to build a background noise track used as an input signal. In either case, a log2 fold change of 1.1 between the background and the sample of interest was used as the threshold to identify regions of interest. Data were normalized for efficiency biases as described in the CSAW documentation, since libraries of the same size can still have composition bias, and 8-oxoG changes the base composition. Differentially bound sites were called using EdgeR with the statistical formulas described in the configuration file. The prior N was set to 20 degrees of freedom in the GLM test. FDR values from EdgeR were corrected by merging regions across gene annotations, to account for related binding sites. Throughout the whole process, reads flagged as duplicates are treated as any other read. Differentially bound regions were annotated as if they were peaks, using the same methods and parameters.

Additional quality controls were made using FastqScreen version 0.15.3. Complete quality reports were built using the MultiQC aggregation tool and in-house scripts.

The whole pipeline was powered by Snakemake version 7.26.0 and Snakemake-Wrappers version v2.0.0.

Citations

- Fastp: Chen, Shifu, et al. "fastp: an ultra-fast all-in-one FASTQ preprocessor." Bioinformatics 34.17 (2018): i884-i890.
- Bowtie2: Langmead, Ben, and Steven L. Salzberg. "Fast gapped-read alignment with Bowtie 2." Nature methods 9.4 (2012): 357-359.
- Sambamba: Tarasov, Artem, et al. "Sambamba: fast processing of NGS alignment formats." Bioinformatics 31.12 (2015): 2032-2034.
- Ensembl: Martin, Fergal J., et al. "Ensembl 2023." Nucleic Acids Research 51.D1 (2023): D933-D941.
- Snakemake: Köster, Johannes, and Sven Rahmann. "Snakemake—a scalable bioinformatics workflow engine." Bioinformatics 28.19 (2012): 2520-2522.
- Atac-Seq: Buenrostro, Jason D., et al. "Transposition of native chromatin for fast and sensitive epigenomic profiling of open chromatin, DNA-binding proteins and nucleosome position." Nature methods 10.12 (2013): 1213-1218.
- MeDIPS: Lienhard, Matthias, et al. "MEDIPS: genome-wide differential coverage analysis of sequencing data derived from DNA enrichment experiments." Bioinformatics 30.2 (2014): 284-286.
- DeepTools: Ramírez, Fidel, et al. "deepTools: a flexible platform for exploring deep-sequencing data." Nucleic acids research 42.W1 (2014): W187-W191.
- UCSC: Nassar LR, Barber GP, Benet-Pagès A, Casper J, Clawson H, Diekhans M, Fischer C, Gonzalez JN, Hinrichs AS, Lee BT, Lee CM, Muthuraman P, Nguy B, Pereira T, Nejad P, Perez G, Raney BJ, Schmelter D, Speir ML, Wick BD, Zweig AS, Haussler D, Kuhn RM, Haeussler M, Kent WJ. The UCSC Genome Browser database: 2023 update. Nucleic Acids Res. 2022 Nov 24. PMID: 36420891
- ChIPSeeker: Yu, Guangchuang, Li-Gen Wang, and Qing-Yu He. "ChIPseeker: an R/Bioconductor package for ChIP peak annotation, comparison and visualization." Bioinformatics 31.14 (2015): 2382-2383.
- CSAW: Lun, Aaron TL, and Gordon K. Smyth. "csaw: a Bioconductor package for differential binding analysis of ChIP-seq data using sliding windows." Nucleic acids research 44.5 (2016): e45-e45.
- Macs2: Gaspar, John M. "Improved peak-calling with MACS2." BioRxiv (2018): 496521.
- Homer: Heinz S, Benner C, Spann N, Bertolino E et al. Simple Combinations of Lineage-Determining Transcription Factors Prime cis-Regulatory Elements Required for Macrophage and B Cell Identities. Mol Cell 2010 May 28;38(4):576-589. PMID: 20513432
- Seacr: Meers, Michael P., Dan Tenenbaum, and Steven Henikoff. "Peak calling by Sparse Enrichment Analysis for CUT&RUN chromatin profiling." Epigenetics & chromatin 12 (2019): 1-11.
- BedTools: Quinlan, Aaron R., and Ira M. Hall. "BEDTools: a flexible suite of utilities for comparing genomic features." Bioinformatics 26.6 (2010): 841-842.
- Snakemake-Wrappers version v2.0.0

Roadmap

- Coverage: PBC
- Peak-annotation: CentriMo
- Differential Peak Calling: DiffBind
- IGV: screen-shot, igv-reports

Based on Snakemake-Wrappers version v2.0.0

Code Snippets

```python
__author__ = "Thibault Dayris"
__copyright__ = "Copyright 2023, Thibault Dayris"
__email__ = "[email protected]"
__license__ = "MIT"

from snakemake.shell import shell

log = snakemake.log_fmt_shell(stdout=True, stderr=True)
extra = snakemake.params.get("extra", "")

# Optional blacklist of regions to exclude
blacklist = snakemake.input.get("blacklist", "")
if blacklist:
    extra += f" --blackListFileName {blacklist} "

# Write BED instead of BAM when the output file name asks for it
out_file = snakemake.output[0]
if out_file.endswith(".bed"):
    extra += " --BED "

shell(
    "alignmentSieve "
    "{extra} "
    "--numberOfProcessors {snakemake.threads} "
    "--bam {snakemake.input.aln} "
    "--outFile {out_file} "
    "{log} "
)
```

```python
__author__ = "Jan Forster, Felix Mölder"
__copyright__ = "Copyright 2019, Jan Forster"
__email__ = "[email protected], [email protected]"
__license__ = "MIT"

from snakemake.shell import shell

## Extract arguments
extra = snakemake.params.get("extra", "")

log = snakemake.log_fmt_shell(stdout=True, stderr=True)

if len(snakemake.input) > 1:
    # Several inputs: concatenate, sort, then merge from stdin
    if all(f.endswith(".gz") for f in snakemake.input):
        cat = "zcat"
    elif all(not f.endswith(".gz") for f in snakemake.input):
        cat = "cat"
    else:
        raise ValueError("Input files must be all compressed or uncompressed.")
    shell(
        "({cat} {snakemake.input} | "
        "sort -k1,1 -k2,2n | "
        "bedtools merge {extra} "
        "-i stdin > {snakemake.output}) "
        " {log}"
    )
else:
    shell(
        "( bedtools merge"
        " {extra}"
        " -i {snakemake.input}"
        " > {snakemake.output})"
        " {log}"
    )
```

```python
__author__ = "Jan Forster"
__copyright__ = "Copyright 2021, Jan Forster"
__email__ = "[email protected]"
__license__ = "MIT"

import os
from snakemake.shell import shell

log = snakemake.log_fmt_shell(stdout=True, stderr=True)

shell(
    "sambamba index {snakemake.params.extra} -t {snakemake.threads} "
    "{snakemake.input[0]} {snakemake.output[0]} "
    "{log}"
)
```

```python
__author__ = "Jan Forster"
__copyright__ = "Copyright 2021, Jan Forster"
__email__ = "[email protected]"
__license__ = "MIT"

import os
from snakemake.shell import shell

log = snakemake.log_fmt_shell(stdout=True, stderr=True)

shell(
    "sambamba merge {snakemake.params.extra} -t {snakemake.threads} "
    "{snakemake.output[0]} {snakemake.input} "
    "{log}"
)
```

```python
__author__ = "Jan Forster"
__copyright__ = "Copyright 2021, Jan Forster"
__email__ = "[email protected]"
__license__ = "MIT"

import os
from snakemake.shell import shell

in_file = snakemake.input[0]
extra = snakemake.params.get("extra", "")
log = snakemake.log_fmt_shell(stdout=False, stderr=True)

# Add --sam-input for SAM files, unless the user already passed -S or --sam-input
if in_file.endswith(".sam") and ("-S" not in extra and "--sam-input" not in extra):
    extra += " --sam-input"

shell(
    "sambamba view {extra} -t {snakemake.threads} "
    "{snakemake.input[0]} > {snakemake.output[0]} "
    "{log}"
)
```

script: "../../scripts/chipseeker/chipseeker_plot_gene_body.R"

script: "../../scripts/chipseeker/chipseeker_plot_gene_body.R"

script: "../../scripts/chipseeker/chipseeker_covplot.R"

script: "../../scripts/chipseeker/chipseeker_covplot.R"

script: "../../scripts/chipseeker/chipseeker_annotate.R"

script: "../../scripts/chipseeker/chipseeker_annotate.R"

script: "../../scripts/chipseeker/chipseeker_plot_annobar.R"

script: "../../scripts/chipseeker/chipseeker_plot_annobar.R"

script: "../../scripts/chipseeker/chipseeker_plot_annobar_lsit.R"

script: "../../scripts/chipseeker/chipseeker_plot_distance_tss.R"

script: "../../scripts/chipseeker/chipseeker_plot_distance_tss.R"

script: "../../scripts/chipseeker/chipseeker_plot_distance_tss_list.R"

script: "../../scripts/chipseeker/chipseeker_plot_upsetvenn.R"

script: "../../scripts/chipseeker/chipseeker_plot_upsetvenn.R"

script: "../../scripts/chipseeker/chipseeker_make_tagmatrix.R"

script: "../../scripts/chipseeker/chipseeker_make_tagmatrix.R"

shell: "annotatePeaks.pl " "{input.peak} " "{params.genome} " "-cpu {threads} " "-wig {input.wig} " "{params.extra} " "> {output.annotations} " "2> {log} "

script: "../../scripts/csaw/csaw_fregment_length.R"

script: "../../scripts/csaw/csaw_count.R"

script: "../../scripts/csaw/csaw_filter.R"

shell: "multiBigwigSummary bins {params.extra} " "--bwfiles {input.bw} " "--outFileName {output.bw} " "--blackListFileName {input.blacklist} " "--numberOfProcessors {threads} " "> {log} 2>&1 "

script: "../../scripts/medip-seq/medips_meth.R"

shell: "plotCorrelation --corData {input.bw} " "--plotFile {output.png} " "--outFileCorMatrix {output.stats} " "{params.extra} > {log} 2>&1 "

shell: "plotPCA {params.extra} --corData {input.bw} " "--plotFile {output.png} " "--outFileNameData {output.stats} " "> {log} 2>&1 "

script: "../../scripts/csaw/csaw_normalize.R"

script: "../../scripts/csaw/csaw_edger.R"

script: "../../scripts/medip-seq/medips_edger.R"

wrapper: "v2.0.0/bio/sambamba/merge"

shell: "cat {params} {input} > {output} 2> {log}"

shell: "sort {params} {input} > {output} 2> {log}"

wrapper: "v2.0.0/bio/bedtools/merge"

script: "../../scripts/factorfootprints/factor_footprints.R"

script: "../../scripts/factorfootprints/factor_footprints.R"

script: "../../scripts/csaw/csaw_readparam.R"

script: "../../scripts/misc/rename.py"

script: "../../scripts/misc/rename.py"

shell: "xenome index {params.extra} " "--num-threads {threads} " "--max-memory {resources.mem_mb} " "--prefix {params.prefix} " "--graft {input.human} " "--host {input.mouse} " "--log-file {log.tool} " "--tmp-dir {resources.tmpdir} " "> {log.general} 2>&1 "

shell: "cut {params.cut} {input} | sort {params.sort} > {output} 2> {log}"

shell: "bedtools intersect " "{params.extra} " "-g {input.genome} " "-abam {input.left} " "-b {input.right} " "> {output} 2> {log}"

shell: "computeGCBias " "--bamfile {input.bam} " "--genome {input.genome} " "--GCbiasFrequenciesFile {output.freq} " "--effectiveGenomeSize {params.genome_size} " "--blackListFileName {input.blacklist} " "--numberOfProcessors {threads} " "--biasPlot {output.plot} " "{params.extra} " "> {log} 2>&1 "

shell: "correctGCBias " "--bamfile {input.bam} " "--genome {input.genome} " "--GCbiasFrequenciesFile {input.freq} " "--correctedFile {output} " "--effectiveGenomeSize {params.genome_size} " "--numberOfProcessors {threads} " "{params.extra} " "> {log} 2>&1 "

wrapper: "master/bio/deeptools/alignmentsieve"

wrapper: "master/bio/deeptools/alignmentsieve"

wrapper: "v2.0.0/bio/sambamba/view"

shell: "sed {params} {input} > {output} 2> {log}"

wrapper: "v2.0.0/bio/sambamba/view"

shell: "awk --file {params.awk} {input} > {output} 2> {log}"

shell: "sed {params} {input} > {output} 2> {log}"

shell: "cat {params.extra} {input.header} {input.reads} > {output} 2> {log}"

wrapper: "v2.0.0/bio/sambamba/view"

wrapper: "v2.0.0/bio/sambamba/index"

wrapper: "v2.0.0/bio/sambamba/view"

shell: "sed {params} {input} > {output} 2> {log}"

wrapper: "v2.0.0/bio/sambamba/view"

shell: "sed {params} {input} > {output} 2> {log}"

shell: "awk --file {params.awk} {input} > {output} 2> {log}"

shell: "cat {params.extra} {input.header} {input.reads} > {output} 2> {log}"

wrapper: "v2.0.0/bio/sambamba/view"

wrapper: "v2.0.0/bio/sambamba/index"

wrapper: "v2.0.0/bio/sambamba/merge"

wrapper: "v2.0.0/bio/sambamba/index"

shell: "bamPEFragmentSize " "{params.extra} " "--bamfiles {input.bam} " "--histogram {output.hist} " "--numberOfProcessors {threads} " "--blackListFileName {input.blacklist} " "> {output.metrics} 2> {log} "

script: "../../scripts/rsamtools/create_bam_file.R"

script: "../../scripts/rsamtools/create_design.R"

shell: "xenome classify {params.extra} " "--log-file {log.tool} " "--num-threads {threads} " "--tmp-dir {resources.tmpdir} " "--max-memory {resources.mem_mb} " "--output-filename-prefix {params.out_prefix} " "--prefix {params.idx_prefix} " "--fastq-in {input.r1} --fastq-in {input.r2} " "> {log.general} 2>&1 "

shell: "xenome classify {params.extra} " "--log-file {log.tool} " "--num-threads {threads} " "--tmp-dir {resources.tmpdir} " "--max-memory {resources.mem_mb} " "--output-filename-prefix {params.out_prefix} " "--prefix {params.idx_prefix} " "--fastq-in {input.reads} " "> {log.general} 2>&1 "

script: "../../scripts/medips_load.R"

script: "../../scripts/medips_coupling.R"

shell: "awk --file {params.script} {input} > {output} 2> {log}"

shell: "findMotifsGenome.pl " "{input.peak} " "{params.genome} " "{params.output_directory} " "-size {params.size} " "{params.extra} " "-p {threads} " "> {log} 2>&1 "

shell: "rsync {params} {input} {output} > {log} 2>&1"

shell: "rsync {params} {input} {output} > {log} 2>&1"

shell: "awk --file {params.script} {input} > {output} 2> {log}"

shell: "NB=$(sambamba view {params} {input}) && " 'echo -e "{wildcards.sample}\t${{NB}}" > {output} 2> {log}'

shell: "NB=$(sambamba view {params} {input}) && " 'echo -e "{wildcards.sample}\t${{NB}}" > {output} 2> {log}'

shell: "paste - - <(cat {input.called}) <(cat {input.total}) > {output} 2> {log}"

shell: "awk --file {params.script} {input} > {output} 2> {log}"

shell: "SEACR_1.3.sh " "{input.exp_bg} " "{params.extra} " "> {log} 2>&1"

shell: "awk --file {params.script} {input} > {output} 2> {log}"

shell: "wget {params.extra} {params.address} -O {output} > {log} 2>&1"

shell: "wget {params.extra} {params.address} -O {output} > {log} 2>&1"

shell: "wget {params.extra} {params.address} -O {output} > {log} 2>&1"

shell: "wget {params.extra} {params.address} -O {output} > {log} 2>&1"

shell: "wget {params} --directory-prefix {output} " "{params.url} > {log} 2>&1"

shell: "wget {params} --directory-prefix {output} " "{params.url} > {log} 2>&1"

```r
log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::library(package = "org.Hs.eg.db", character.only = TRUE)
base::library(package = "org.Mm.eg.db", character.only = TRUE)
base::library(package = "EnsDb.Hsapiens.v86", character.only = TRUE)
base::library(package = "EnsDb.Mmusculus.v79", character.only = TRUE)
base::message("Libraries loaded")

# Select organism annotations (hg38 by default)
organism <- "hg38"
if ("organism" %in% base::names(x = snakemake@params)) {
  organism <- base::as.character(x = snakemake@params[["organism"]])
}
organism <- base::tolower(x = organism)

if (organism == "hg38") {
  anno_db <- "org.Hs.eg.db"
  edb <- EnsDb.Hsapiens.v86
} else if (organism == "mm10") {
  anno_db <- "org.Mm.eg.db"
  edb <- EnsDb.Mmusculus.v79
} else {
  base::stop("Unknown organism annotation")
}
seqlevelsStyle(edb) <- "Ensembl"

# Load peaks, either as a saved GRanges object or as a BED file
ranges <- NULL
if ("ranges" %in% base::names(x = snakemake@input)) {
  ranges <- base::readRDS(file = base::as.character(x = snakemake@input[["ranges"]]))
} else {
  ranges <- ChIPseeker::readPeakFile(
    peakfile = base::as.character(x = snakemake@input[["bed"]]),
    as = "GRanges"
  )
}
base::message("Parameters and data loaded")

# Building command line
extra <- "peak = ranges, annoDb = anno_db, TxDb = edb"
if ("extra" %in% base::names(x = snakemake@params)) {
  extra <- base::paste(
    extra,
    base::as.character(x = snakemake@params[["extra"]]),
    sep = ", "
  )
}
command <- base::paste0(
  "ChIPseeker::annotatePeak(",
  extra,
  ")"
)
base::message("Command line used:")
base::message(command)

# Annotating
annotation <- base::eval(base::parse(text = command))
base::message("Data annotated")

# Saving results
base::saveRDS(
  object = annotation,
  file = base::as.character(x = snakemake@output[["rds"]])
)
base::message("RDS saved")

utils::write.table(
  base::as.data.frame(annotation),
  sep = "\t",
  col.names = TRUE,
  row.names = TRUE,
  file = base::as.character(x = snakemake@output[["tsv"]])
)
base::message("Process over")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
```

```r
log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")
base::message("Logging defined")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::message("Libraries loaded")

# Load peaks, either from a BED file or from a saved GRanges object
peak <- NULL
if ("bed" %in% base::names(x = snakemake@input)) {
  peak <- ChIPseeker::readPeakFile(
    peakfile = base::as.character(x = snakemake@input[["bed"]]),
    as = "GRanges",
    header = FALSE
  )
} else {
  peak <- base::readRDS(
    file = base::as.character(x = snakemake@input[["ranges"]])
  )
}
base::message("Ranges loaded")

# Build plot
grDevices::png(
  filename = snakemake@output[["png"]],
  width = 1024,
  height = 768,
  units = "px",
  type = "cairo"
)
ChIPseeker::covplot(peak, weightCol = "V5")
dev.off()
base::message("Plot saved")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
```

```r
log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")
base::message("Logging defined")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::library(
  package = "TxDb.Hsapiens.UCSC.hg38.knownGene",
  character.only = TRUE
)
base::library(
  package = "TxDb.Mmusculus.UCSC.mm10.knownGene",
  character.only = TRUE
)
base::message("Libraries loaded")

# Load peaks
if ("bed" %in% base::names(x = snakemake@input)) {
  peaks <- base::as.character(x = snakemake@input[["bed"]])
} else if ("ranges" %in% base::names(x = snakemake@input)) {
  peaks <- base::readRDS(
    file = base::as.character(x = snakemake@input[["ranges"]])
  )
}
base::message("Peaks loaded")

# Select transcript database (hg38 by default, mm10 on request)
txdb <- TxDb.Hsapiens.UCSC.hg38.knownGene
if ("organism" %in% base::names(x = snakemake@params)) {
  if (snakemake@params[["organism"]] == "mm10") {
    txdb <- TxDb.Mmusculus.UCSC.mm10.knownGene
  }
}
seqlevelsStyle(txdb) <- "Ensembl"

# Acquire promoter position
promoter <- ChIPseeker::getPromoters(
  TxDb = txdb,
  upstream = 3000,
  downstream = 3000,
  by = 'gene'
)

# Build tagmatrix
tagMatrix <- ChIPseeker::getTagMatrix(
  peak = peaks,
  windows = promoter
)
base::message("TagMatrix built")

# Save object
base::saveRDS(
  file = base::as.character(x = snakemake@output[["rds"]]),
  object = tagMatrix
)
base::message("Process over")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
```

```r
log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")
base::message("Logging defined")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::message("Libraries loaded")

ranges <- base::readRDS(
  file = base::as.character(x = snakemake@input[["ranges"]])
)
base::message("Ranges loaded")

# Build plot
grDevices::png(
  filename = snakemake@output[["png"]],
  width = 1024,
  height = 384,
  units = "px",
  type = "cairo"
)
ChIPseeker::plotAnnoBar(ranges)
dev.off()
base::message("Plot saved")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
```

```r
log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")
base::message("Logging defined")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::message("Libraries loaded")

# Load one GRanges object per input file
ranges_list <- base::lapply(
  snakemake@input[["ranges"]],
  function(range_path) {
    base::readRDS(
      file = base::as.character(x = range_path)
    )
  }
)
base::message("Ranges loaded")

# Build plot
grDevices::png(
  filename = snakemake@output[["png"]],
  width = 1024,
  height = 384,
  units = "px",
  type = "cairo"
)
ChIPseeker::plotDistToTSS(ranges_list)
dev.off()
base::message("Plot saved")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
```

log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")
base::message("Logging defined")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::message("Libraries loaded")

ranges <- base::readRDS(
  file = base::as.character(x = snakemake@input[["ranges"]])
)
base::message("Ranges loaded")

# Build plot
grDevices::png(
  filename = snakemake@output[["png"]],
  width = 1024,
  height = 384,
  units = "px",
  type = "cairo"
)
ChIPseeker::plotDistToTSS(ranges)
grDevices::dev.off()
base::message("Plot saved")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()

log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")
base::message("Logging defined")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::library(
  package = "TxDb.Hsapiens.UCSC.hg38.knownGene",
  character.only = TRUE
)
base::library(
  package = "TxDb.Mmusculus.UCSC.mm10.knownGene",
  character.only = TRUE
)
ncpus <- base::as.numeric(snakemake@threads[[1]])
base::options(mc.cores = ncpus)
base::message("Libraries loaded")

ranges <- NULL
if ("bed" %in% base::names(x = snakemake@input)) {
  bed_path <- base::as.character(x = snakemake@input[["bed"]])
  ranges <- ChIPseeker::readPeakFile(
    peakfile = bed_path,
    as = "GRanges",
    header = FALSE
  )
} else if ("ranges" %in% base::names(x = snakemake@input)) {
  ranges <- base::readRDS(
    file = base::as.character(x = snakemake@input[["ranges"]])
  )
}
base::message("Peaks/Ranges loaded")

tag_matrix <- base::readRDS(
  file = base::as.character(x = snakemake@input[["tagmatrix"]])
)
upstream <- base::attr(x = tag_matrix, which = "upstream")
downstream <- base::attr(x = tag_matrix, which = "downstream")
base::message("Tagmatrix loaded")

# The default resample value
resample <- 500
window_number <- base::length(tag_matrix)

# Search all prime factors for the given number of windows
prime_factors <- function(x) {
  factors <- c()
  last_prime <- 2
  while (x >= last_prime) {
    if (!x %% last_prime) {
      factors <- c(factors, last_prime)
      x <- x / last_prime
      last_prime <- last_prime - 1
    }
    last_prime <- last_prime + 1
  }
  base::return(factors)
}
primes <- base::as.data.frame(x = base::table(prime_factors(x = window_number)))
primes$Var1 <- base::as.integer(base::as.character(primes$Var1))
primes <- primes$Var1 ^ primes$Freq

# Prime power closest to the default resample value (first one on ties)
primes_min <- base::abs(primes - resample)
resample <- primes[base::which.min(primes_min)]
base::message("Resampling value: ", resample)

txdb <- TxDb.Hsapiens.UCSC.hg38.knownGene
if ("organism" %in% base::names(x = snakemake@params)) {
  if (snakemake@params[["organism"]] == "mm10") {
    txdb <- TxDb.Mmusculus.UCSC.mm10.knownGene
  }
}
base::message("Organism library available")

# Build plot
grDevices::png(
  filename = snakemake@output[["png"]],
  width = 1024,
  height = 768,
  units = "px",
  type = "cairo"
)
ChIPseeker::plotPeakProf(
  peak = ranges,
  tagMatrix = tag_matrix,
  upstream = upstream, # rel(0.2)
  downstream = downstream, # rel(0.2)
  weightCol = "V5",
  ignore_strand = TRUE,
  conf = 0.95,
  by = "gene",
  type = "body",
  TxDb = txdb,
  facet = "row",
  nbin = 800,
  verbose = TRUE,
  resample = resample
)
grDevices::dev.off()
base::message("Plot saved")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
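The resampling step in the script above factors the number of windows into prime powers and keeps the one nearest to 500. A self-contained sketch of that selection, using a hypothetical `closest_prime_power()` function that accumulates each prime power directly by trial division instead of going through `table()`; the input values below are illustrative, not pipeline output.

```r
# Hypothetical helper: factor `n` into prime powers p^k and return the
# one closest to `target` (the first one on ties, as which.min() does).
closest_prime_power <- function(n, target = 500) {
  powers <- c()
  p <- 2
  while (n > 1) {
    if (n %% p == 0) {
      power <- 1
      # Divide out every copy of p, accumulating p^k
      while (n %% p == 0) {
        power <- power * p
        n <- n / p
      }
      powers <- c(powers, power)
    }
    p <- p + 1
  }
  powers[which.min(abs(powers - target))]
}

closest_prime_power(6000)  # 6000 = 2^4 * 3 * 5^3, returns 125
```

Non-prime values of `p` are never selected because their prime factors were already divided out of `n`.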

log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")
base::message("Logging defined")

# Load libraries
base::library(package = "ChIPseeker", character.only = TRUE)
base::message("Libraries loaded")

ranges <- base::readRDS(
  file = base::as.character(x = snakemake@input[["ranges"]])
)
base::message("Ranges loaded")

# Build plot
grDevices::png(
  filename = snakemake@output[["png"]],
  width = 1024,
  height = 768,
  units = "px",
  type = "cairo"
)
ChIPseeker::upsetplot(ranges, vennpie = TRUE)
grDevices::dev.off()
base::message("Plot saved")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()

log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")

# Load libraries
base::library(package = "BiocParallel", character.only = TRUE)
base::library(package = "csaw", character.only = TRUE)
base::message("Libraries loaded")

if (snakemake@threads > 1) {
  BiocParallel::register(
    BiocParallel::MulticoreParam(snakemake@threads)
  )
  options("mc.cores" = snakemake@threads)
  base::message("Process multi-threaded")
}

# Loading list of input files
design <- readRDS(
  file = base::as.character(x = snakemake@input[["design"]])
)
frag_length <- readRDS(
  file = base::as.character(x = snakemake@input[["fragment_length"]])
)

# Loading filter parameters
read_params <- base::readRDS(
  file = base::as.character(x = snakemake@input[["read_params"]])
)
base::message("Input data loaded")

extra <- "bam.files = design$BamPath, param = read_params, ext=frag_length"
if ("extra" %in% base::names(x = snakemake@params)) {
  extra <- base::paste(
    extra,
    base::as.character(x = snakemake@params[["extra"]]),
    sep = ", "
  )
}
command <- base::paste(
  "csaw::windowCounts(",
  extra,
  ")"
)
base::message("Count command line:")
base::print(command)

# Counting reads over sliding window
counts <- base::eval(base::parse(text = command))
base::message(
  "Number of extended reads ",
  "overlapping a sliding window ",
  "at spaced positions across the ",
  "genome, acquired."
)

# Saving results
base::saveRDS(
  object = counts,
  file = snakemake@output[["rds"]]
)
base::print(counts$totals)
base::message("RDS saved")

if ("ranges" %in% base::names(snakemake@output)) {
  if (base::endsWith(x = snakemake@output[["ranges"]], suffix = ".RDS")) {
    base::saveRDS(
      object = rowRanges(x = counts),
      file = snakemake@output[["ranges"]]
    )
  } else {
    utils::write.table(
      x = base::as.data.frame(rowRanges(x = counts)),
      file = snakemake@output[["ranges"]],
      sep = "\t",
      col.names = TRUE,
      row.names = TRUE
    )
  }
}

# base::message("Total number of ranges counted: ")
# base::print(counts$totals)
base::message("Process over.")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
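The ranges export at the end of the window-counting script above picks the output format from the file suffix. A base-R sketch of that dispatch, using a plain data.frame in place of `rowRanges(counts)` so it runs without Bioconductor; `save_ranges()` is a hypothetical helper, not part of the pipeline.

```r
# Hypothetical helper: serialize `x` as RDS when the path ends with ".RDS",
# otherwise write it as a tab-separated table with row and column names.
save_ranges <- function(x, path) {
  if (endsWith(path, ".RDS")) {
    saveRDS(object = x, file = path)
  } else {
    utils::write.table(
      x = as.data.frame(x),
      file = path,
      sep = "\t",
      col.names = TRUE,
      row.names = TRUE
    )
  }
  invisible(path)
}
```

Note that `endsWith()` is case-sensitive, so a path ending in ".rds" is written as a TSV table; comparing against `tolower(path)` would relax this if desired.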

log_file <- file(snakemake@log[[1]], open = "wt")
sink(log_file)
sink(log_file, type = "message")

# Load libraries
base::library(package = "edgeR", character.only = TRUE)
base::library(package = "csaw", character.only = TRUE)
base::message("Libraries loaded")

counts <- base::readRDS(
  file = base::as.character(x = snakemake@input[["counts"]])
)
y <- csaw::asDGEList(object = counts)
base::message("Normalized-filtered counts loaded")

exp_design <- utils::read.table(
  file = base::as.character(x = snakemake@input[["design"]]),
  sep = "\t",
  header = TRUE,
  stringsAsFactors = FALSE
)
design <- stats::model.matrix(
  object = stats::as.formula(snakemake@params[["formula"]]),
  data = exp_design
)
base::message("Model matrix built")

prior.n <- edgeR::getPriorN(
  y = y,
  design = design,
  prior.df = 20
)
base::message("Prior N estimated with 20 dof")

y <- edgeR::estimateDisp(
  y = y,
  design = design,
  prior.n = prior.n
)
base::message("Dispersion estimated")

grDevices::png(
  filename = base::as.character(x = snakemake@output[["disp_png"]]),
  width = 1024,
  height = 768
)
edgeR::plotBCV(y = y)
grDevices::dev.off()
base::message("Dispersion plot saved")

fit <- edgeR::glmQLFit(
  y = y,
  design = design,
  robust = TRUE
)
base::message("GLM model fitted")

results <- edgeR::glmQLFTest(
  glmfit = fit,
  coef = utils::tail(x = base::colnames(x = design), 1)
)
base::message("Window counts tested")

grDevices::png(
  filename = base::as.character(x = snakemake@output[["ql_png"]]),
  width = 1024,
  height = 768
)
edgeR::plotQLDisp(glmfit = fit)
grDevices::dev.off()
base::message("Quasi-likelihood dispersion plot saved")

# rowData(counts) <- base::cbind(rowData(counts), results$table)
merged <- csaw::mergeResults(
  counts,
  results$table,
  tol = 100,
  merge.args = list(max.width = 5000)
)
base::message("Results stored in RangedSummarizedExperiment object")

# Save results
if ("qc" %in% base::names(snakemake@output)) {
  # Count significant clusters (FDR <= 0.05) and their direction
  fdr <- merged$combined$FDR
  is_significative <- base::which(fdr <= 0.05)
  direction <- merged$combined$direction[is_significative]
  sig <- base::data.frame(
    Differentially_Expressed = base::length(is_significative),
    Not_Significative = base::sum(fdr > 0.05, na.rm = TRUE),
    Up_Regulated = base::sum(direction == "up"),
    Down_Regulated = base::sum(direction == "down")
  )
  utils::write.table(
    x = sig,
    file = base::as.character(x = snakemake@output[["qc"]]),
    sep = "\t"
  )
  base::message("QC table saved")
}

if ("csaw" %in% base::names(snakemake@output)) {
  base::saveRDS(
    object = counts,
    file = base::as.character(x = snakemake@output[["csaw"]])
  )
  base::message("csaw results saved")
}

if ("edger" %in% base::names(snakemake@output)) {
  base::saveRDS(
    object = results,
    file = base::as.character(x = snakemake@output[["edger"]])
  )
  base::message("edgeR results saved")
}

base::message("Process over")

# Proper syntax to close the connection for the log file
# but could be optional for Snakemake wrapper
base::sink(type = "message")
base::sink()
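The QC table in the differential-binding script above counts significant clusters and splits them by direction. A base-R sketch of that summary, assuming the FDR and direction vectors come from `merged$combined` as in the script and that direction values are strings such as "up", "down" or "mixed". Unlike the script, this sketch counts missing FDR values as not significant, which is an assumption. `summarise_significance()` is a hypothetical helper; plain vectors stand in for the csaw output so it runs without Bioconductor.

```r
# Hypothetical helper: summarise merged csaw/edgeR results from the
# FDR and direction columns of `merged$combined`.
summarise_significance <- function(fdr, direction, threshold = 0.05) {
  # NA FDR values are treated as not significant
  significant <- !is.na(fdr) & fdr <= threshold
  data.frame(
    Differentially_Expressed = sum(significant),
    Not_Significative = sum(!significant),
    Up_Regulated = sum(direction[significant] == "up"),
    Down_Regulated = sum(direction[significant] == "down")
  )
}

summarise_significance(
  fdr = c(0.01, 0.20, 0.04, NA, 0.001),
  direction = c("up", "down", "down", "up", "mixed")
)
```

Counting with `sum()` on logical vectors avoids indexing a `summary()` result, and it returns 0 rather than failing when no cluster is up- or down-regulated.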